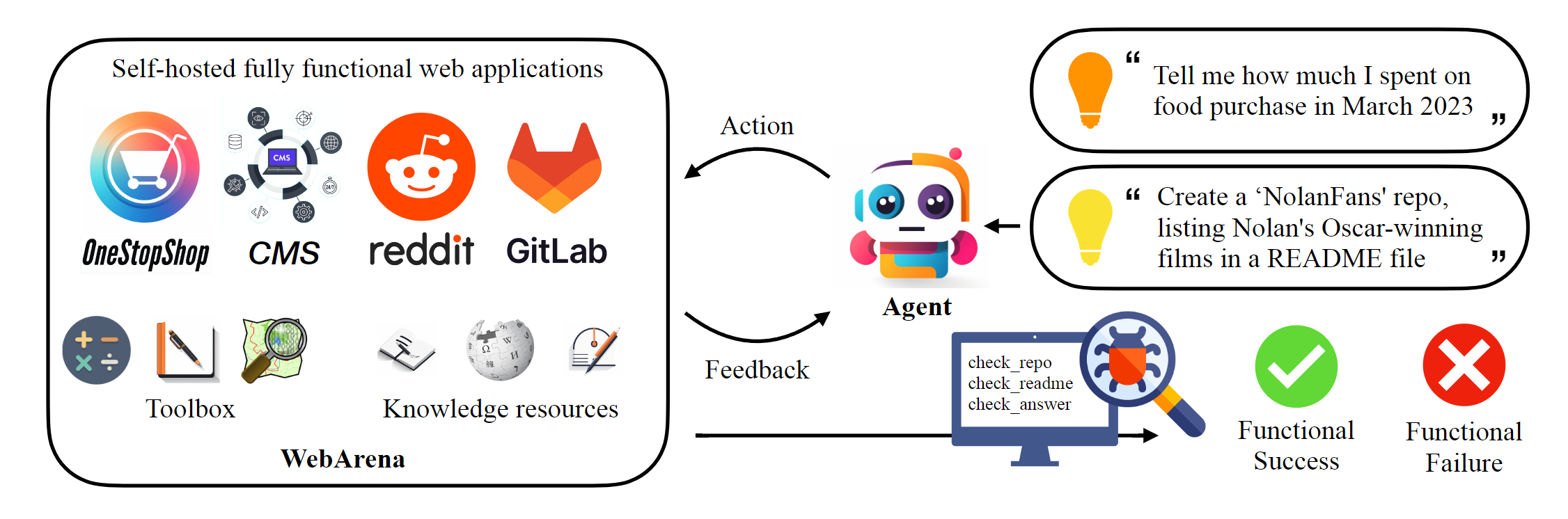

WebArena is a standalone, self-hostable web environment for building autonomous agents

Website • Paper • Leaderboard • TheAgentCompany

## Update on 12/5/2024

> [!IMPORTANT]
> This repository hosts the *canonical* implementation of WebArena to reproduce the results reported in the paper. The web navigation infrastructure has been significantly enhanced by [AgentLab](https://github.com/ServiceNow/AgentLab/), introducing several key features: (1) support for parallel experiments using [BrowserGym](https://github.com/ServiceNow/BrowserGym), (2) integration of popular web navigation benchmarks (e.g., VisualWebArena) within a unified framework, (3) unified leaderboard reporting, and (4) improved handling of environment edge cases. We strongly recommend using this framework for your experiments.

## News

* [12/20/2024] Check out our new benchmark on even more consequential tasks, including terminal use and coding, [TheAgentCompany](https://the-agent-company.com).
* [12/21/2023] We released recordings of trajectories performed by human annotators on ~170 tasks. Check out the [resource page](./resources/README.md#12212023-human-trajectories) for more details.
* [11/3/2023] Multiple features!
    * Uploaded the newest [execution trajectories](./resources/README.md#1132023-execution-traces-from-our-experiments-v2)
    * Added an [Amazon Machine Image](./environment_docker/README.md#pre-installed-amazon-machine-image) with all websites pre-installed, so that you don't have to install them yourself!
    * [Zeno](https://zenoml.com/) x WebArena, which allows you to analyze your agents on WebArena without pain.
      Check out this [notebook](./scripts/webarena-zeno.ipynb) to upload your own data to Zeno, and [this](https://hub.zenoml.com/project/9db3e1cf-6e28-4cfc-aeec-1670cac01872/WebArena%20Tester/explore?params=eyJtb2RlbCI6ImdwdDM1LWRpcmVjdCIsIm1ldHJpYyI6eyJpZCI6NzQ5MiwibmFtZSI6InN1Y2Nlc3MiLCJ0eXBlIjoibWVhbiIsImNvbHVtbnMiOlsic3VjY2VzcyJdfSwiY29tcGFyaXNvbk1vZGVsIjoiZ3B0NC1jb3QiLCJjb21wYXJpc29uQ29sdW1uIjp7ImlkIjoiYTVlMDFiZDUtZTg0NS00M2I4LTllNDgtYTU4NzRiNDJjNjNhIiwibmFtZSI6ImNvbnRleHQiLCJjb2x1bW5UeXBlIjoiT1VUUFVUIiwiZGF0YVR5cGUiOiJOT01JTkFMIiwibW9kZWwiOiJncHQzNS1kaXJlY3QifSwiY29tcGFyZVNvcnQiOltudWxsLHRydWVdLCJtZXRyaWNSYW5nZSI6WzAsMV0sInNlbGVjdGlvbnMiOnsibWV0YWRhdGEiOnt9LCJzbGljZXMiOltdLCJ0YWdzIjpbXX19) page for browsing our existing results!
* [10/24/2023] We re-examined the whole dataset and fixed the annotation bugs we spotted. The current version ([v0.2.0](https://github.com/web-arena-x/webarena/releases/tag/v0.2.0)) is relatively stable and we don't expect major updates to the annotations in the future. The new results with better prompts and the comparison with human performance can be found in our [paper](https://arxiv.org/abs/2307.13854).
* [8/4/2023] Added the instructions and the docker resources to host your own WebArena Environment. Check out [this page](environment_docker/README.md) for details.
* [7/29/2023] Added [a well commented script](minimal_example.py) to walk through the environment setup.

## Install

```bash
# Python 3.10+
conda create -n webarena python=3.10; conda activate webarena
pip install -r requirements.txt
playwright install
pip install -e .

# optional, dev only
pip install -e ".[dev]"
mypy --install-types --non-interactive browser_env agents evaluation_harness
pip install pre-commit
pre-commit install
```

## Quick Walkthrough

Check out [this script](minimal_example.py) for a quick walkthrough on how to set up the browser environment and interact with it using the demo sites we hosted.
This script is for educational purposes only; to perform *reproducible* experiments, please check out the next section. In a nutshell, using WebArena is very similar to using OpenAI Gym. The following code snippet shows how to interact with the environment.

```python
import random

from browser_env import ScriptBrowserEnv, create_id_based_action

# init the environment
env = ScriptBrowserEnv(
    headless=False,
    observation_type="accessibility_tree",
    current_viewport_only=True,
    viewport_size={"width": 1280, "height": 720},
)

# prepare the environment for a configuration defined in a json file
config_file = "config_files/0.json"
obs, info = env.reset(options={"config_file": config_file})
# get the text observation (e.g., html, accessibility tree) through obs["text"]

# create a random action
# (a random id may not match any element on the page; this only shows the action format)
element_id = random.randint(0, 1000)
action = create_id_based_action(f"click [{element_id}]")

# take the action
obs, _, terminated, _, info = env.step(action)
```

## End-to-end Evaluation

> [!IMPORTANT]
> To ensure correct evaluation, please set up your own WebArena websites following step 1 and step 2. The demo sites are for browsing only, to help you better understand the content. After evaluating the 812 examples, reset the environment to the initial state following the instructions [here](./environment_docker/README.md#environment-reset).

1. Set up the standalone environment.
Please check out [this page](environment_docker/README.md) for details.

2. Configure the URLs for each website.
```bash
export SHOPPING="